166: Metric Fu

(view original Railscast)

In this episode we’ll show you a handy gem called Metric_fu. It bundles several different tools whose reports show which parts of your code might need extra work. If you want to find out which parts of your application might need refactoring, these tools can be a great help. They also work well as part of an automated testing setup: you can generate reports automatically and evaluate your code as you write your application.

Installation

The Metric_fu gem can be installed from GitHub. First we need to make sure that we’ve added GitHub to our gem sources. We can find out where gem will look for gems with

gem sources

If GitHub isn’t listed as a source it can be added by running

sudo gem sources -a http://gems.github.com

We can now install the gem.

sudo gem install jscruggs-metric_fu

Metric_fu will install several dependencies unless they are already present. These include Flay, Flog, Rcov, Reek, Facets and Roodi. These are the tools that Metric_fu uses to generate its reports, and we’ll cover a number of them later.

Metric_fu provides a rake task that can be used to generate the reports and we’ll need to require the Metric_fu task in our application. We can do this by either modifying the Rakefile or by creating a separate file and putting the code in there. We’ll take the second option and create a file called metric_fu.rake in our application’s /lib/tasks directory. In this file we’ll add our require statement.

    begin
      require 'metric_fu'
    rescue LoadError
    end

The rescue ensures that an exception isn’t raised if the gem isn’t installed or can’t be loaded. Without it, a missing gem would cause every rake task in our application to raise an error and abort.
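
The same guard can be sketched as a small helper. The `optional_require` method below is a hypothetical name made up for illustration and is not part of Metric_fu. It also shows why LoadError has to be named in the rescue clause: it does not descend from StandardError, so a bare rescue would not catch it.

```ruby
# Hypothetical helper (not part of Metric_fu): returns true if the library
# could be loaded and false if it is missing.
def optional_require(name)
  require name
  true
rescue LoadError
  false
end

# LoadError descends from ScriptError rather than StandardError, so a bare
# `rescue` clause would not catch it; it has to be named explicitly.
puts LoadError.ancestors.include?(StandardError)

# 'set' ships with Ruby; the second name is assumed not to be installed.
puts optional_require('set')
puts optional_require('a_gem_that_is_surely_not_installed')
```

In a rake file, a helper like this could wrap any Metric_fu configuration in an `if` so that the configuration is skipped when the gem is absent.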

Configuration

The rake file we’ve just created is also the place where we can add any configuration options for Metric_fu. We can configure which tools are run and specify options for each tool, such as which of our application’s directories the tools should run against. If, for example, we only wanted to run Flog and have it just check the code under the /app directory then we could configure Metric_fu as follows.

    begin
      require 'metric_fu'
      MetricFu::Configuration.run do |config|
        config.metrics = [:flog]
        config.flog = { :dirs_to_flog => ['app'] }
      end
    rescue LoadError
    end

There are more details about the configuration options you can specify in the configuration section of the Metric_fu home page.

Running Metric_fu

We’re going to run Metric_fu’s reports against the Railscasts codebase, which can be downloaded from GitHub. Once we’ve got the code we can add the metric_fu.rake file as described above and then run Metric_fu with

rake metrics:all

You might have mixed results the first time you try this and see some error messages, but they should be descriptive enough so that you can work out what has gone wrong. If you’re struggling to get it all working, then a good place to look for help is the Metric_fu Google group.

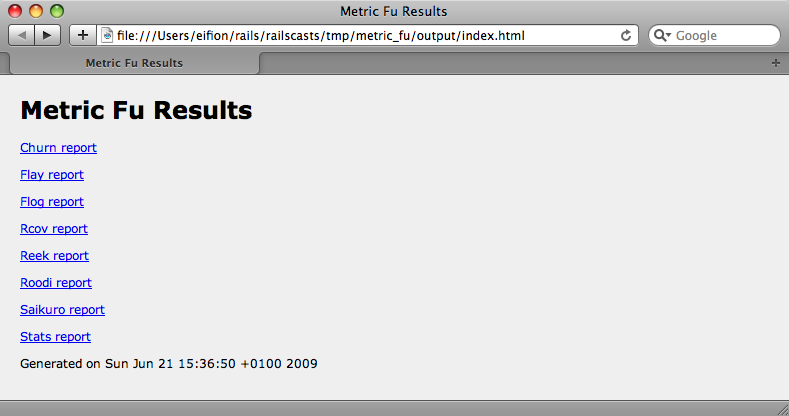

Once the rake task has finished it should open a browser window containing a list of all of the reports that have been generated by each tool.

We won’t cover the output from all of the reports in this episode, but we’ll show a few examples that can help improve your code, starting with Flay.

Flay

Flay analyses your application’s code and looks for structural similarities. Running it against the Railscasts code gives the following output.

In the report above Flay has found some code that could be a good candidate for refactoring. We’ll take a look at the first match. According to Flay both the Comment and SpamReport classes have similarities at line 18 in each file. Let’s take a look at the code and see what it has found.

    def matching_spam_reports
      conditions = []
      conditions << "comment_ip=#{self.class.sanitize(user_ip)}" unless user_ip.blank?
      conditions << "comment_site_url=#{self.class.sanitize(site_url)}" unless site_url.blank?
      conditions << "comment_name=#{self.class.sanitize(name)}" unless name.blank?
      SpamReport.scoped(:conditions => conditions.join(' or '))
    end

The matching_spam_reports method in comment.rb.

    def matching_comments
      conditions = []
      conditions << "user_ip=#{self.class.sanitize(comment_ip)}" unless comment_ip.blank?
      conditions << "site_url=#{self.class.sanitize(comment_site_url)}" unless comment_site_url.blank?
      conditions << "name=#{self.class.sanitize(comment_name)}" unless comment_name.blank?
      Comment.scoped(:conditions => conditions.join(' or '))
    end

The matching_comments method in spam_report.rb.

Both of the methods above are similar, but not identical. This is one of the clever features of Flay: it doesn’t just check for duplicated code, it also finds similarities in your code. The two methods above are similar enough that they could possibly be refactored into a new method, but there are enough differences between them to argue that they should be left separate.
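
If we did decide to remove the duplication, one possible direction is sketched below. The `or_conditions` helper and the `quote` lambda are hypothetical names that do not exist in the Railscasts code. The lambda is a simplified stand-in for ActiveRecord’s sanitize so that the example runs without Rails, and the blank check imitates Rails’ `blank?` with plain Ruby.

```ruby
# Hypothetical helper, not taken from the Railscasts code: builds the
# "column=value or column=value" string that both methods assemble by hand.
# `quote` stands in for ActiveRecord's sanitize so the sketch runs on its own.
def or_conditions(pairs, quote)
  pairs.reject { |_column, value| value.to_s.strip.empty? }
       .map { |column, value| "#{column}=#{quote.call(value)}" }
       .join(' or ')
end

# Simplified quoting for illustration only; real code should keep using
# the database adapter's own sanitizing.
quote = ->(value) { "'#{value.to_s.gsub("'", "''")}'" }

# Roughly what Comment#matching_spam_reports would pass in; the blank
# site URL is skipped, just as in the original method.
comment_side = or_conditions(
  { 'comment_ip' => '127.0.0.1', 'comment_site_url' => '', 'comment_name' => 'Bob' },
  quote
)
puts comment_side
```

Each model would then keep only its own mapping of column names to values, which is the part of the two methods that really differs.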

Flog

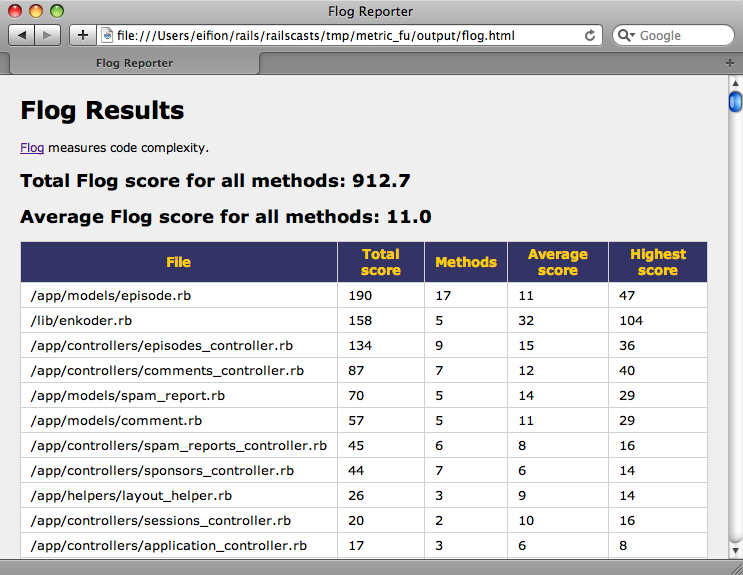

The next tool we’ll look at is Flog, which measures code complexity.

The report Flog generates lists each file in your application in order of its complexity. It’s worth scrolling through the report and looking at the files that score highly in the Highest Score column. In the Railscasts code the highest mark is given to enkoder.rb, which is an external library and can be ignored. The second highest mark is given to the Episode model class. If we look further down the report we’ll see that Flog also provides a report on each class and its methods so that we can see which part of Episode is scoring highly.

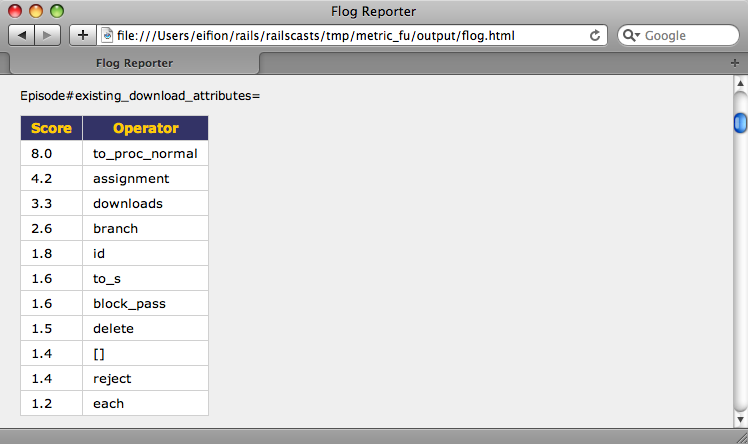

One of Episode’s methods, the existing_download_attributes setter, has quite a high score, so we’ll take a look at its code.

    def existing_download_attributes=(download_attributes)
      downloads.reject(&:new_record?).each do |download|
        attributes = download_attributes[download.id.to_s]
        if attributes
          download.attributes = attributes
        else
          downloads.delete(download)
        end
      end
    end

As the score reflects, this method is fairly complicated and could be a good candidate for refactoring.
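
To get a feel for why a method like this scores highly, here is a toy complexity counter. It is not Flog’s algorithm or scoring table. It simply counts assignments, branches and method calls in the syntax tree produced by Ruby’s standard Ripper library, and the grouping of node types is an assumption made for this sketch.

```ruby
require 'ripper'

# Node types from Ripper's S-expressions, grouped as assignments, branches
# and calls. The grouping is an assumption for this sketch, not Flog's own.
ASSIGNMENTS = %i[assign opassign massign].freeze
BRANCHES    = %i[if elsif unless while until when if_mod unless_mod ifop].freeze
CALLS       = %i[call command command_call fcall].freeze

# Walks the nested arrays returned by Ripper.sexp and tallies each group.
def tally(node, counts = Hash.new(0))
  return counts unless node.is_a?(Array)

  case node.first
  when *ASSIGNMENTS then counts[:assignments] += 1
  when *BRANCHES    then counts[:branches] += 1
  when *CALLS       then counts[:calls] += 1
  end
  node.each { |child| tally(child, counts) }
  counts
end

# The toy score is just the sum of the three tallies.
def toy_complexity(source)
  tally(Ripper.sexp(source)).values.sum
end

simple_source = "def title\n  @title\nend\n"
complex_source = <<~'RUBY'
  def existing_download_attributes=(download_attributes)
    downloads.reject(&:new_record?).each do |download|
      attributes = download_attributes[download.id.to_s]
      if attributes
        download.attributes = attributes
      else
        downloads.delete(download)
      end
    end
  end
RUBY

puts toy_complexity(simple_source)
puts toy_complexity(complex_source)
```

Even this crude count separates a plain reader method from the setter above, which combines a block, a conditional and several chained calls.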

Rcov

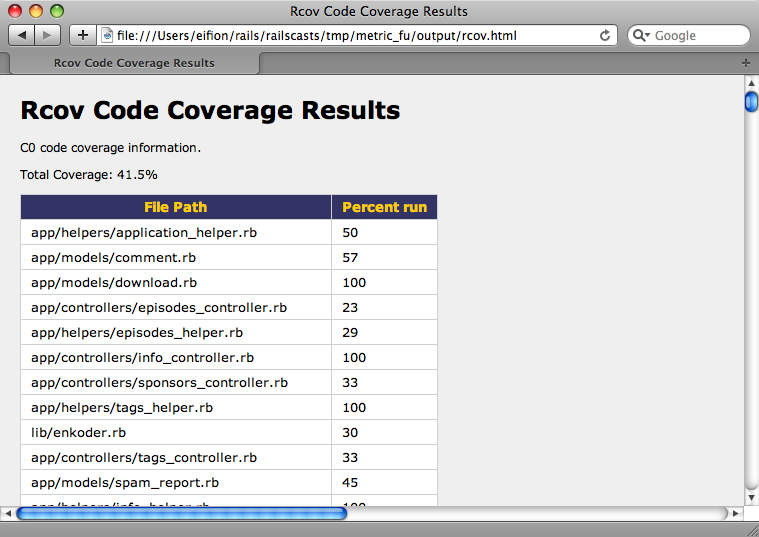

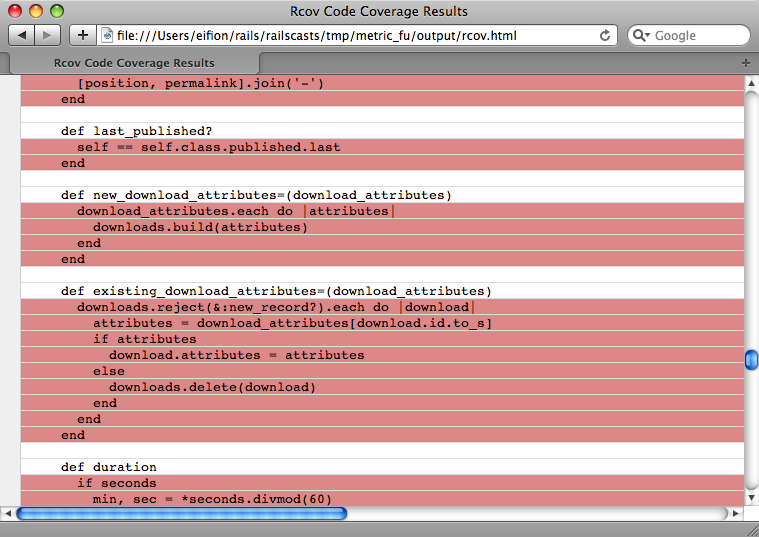

Unlike Flog and Flay, which look at the quality of your code, Rcov examines your tests. Specifically, it reports on how much of your application’s code is covered by tests.

There can be problems when using Rcov from Metric_fu as it can sometimes skip files. If you find that this is happening then Rcov can always be run separately instead. In the report the model class episode.rb scores less than 100%, and if we scroll down the report we’ll see the uncovered code outlined in red. Untested code could contain all sorts of errors that we don’t know about, so Rcov is a valuable tool for making sure that as much of your code as possible is covered by tests.

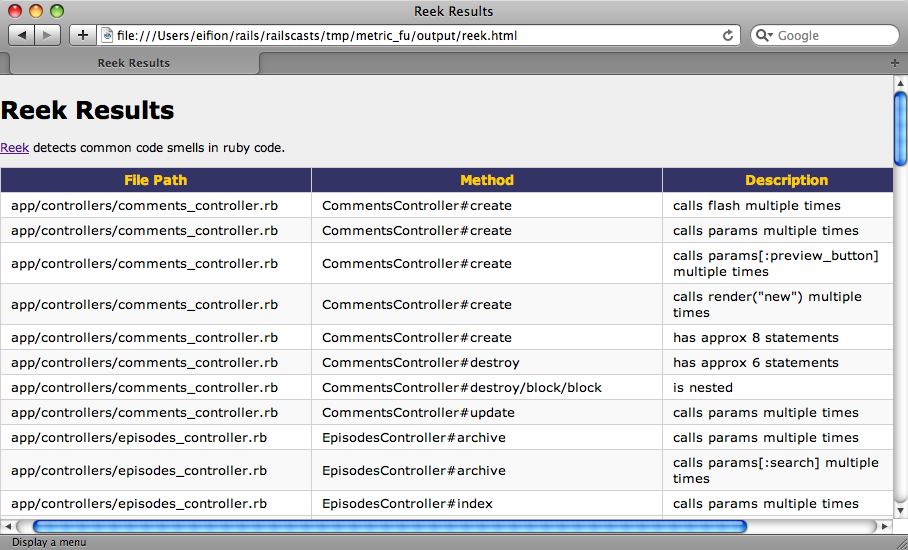
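
The idea behind a coverage report can be tried out in a few lines. The sketch below does not use Rcov. It swaps in the Coverage module from Ruby’s standard library (available from Ruby 1.9 onwards), and the `greet` and `farewell` methods are made-up stand-ins for application code.

```ruby
require 'coverage'
require 'tempfile'

# A tiny stand-in for application code: two methods, one of which the
# "test" below never calls.
source = <<~'RUBY'
  def greet(name)
    "Hello, #{name}"
  end

  def farewell(name)
    "Goodbye, #{name}"
  end
RUBY

file = Tempfile.new(['coverage_demo', '.rb'])
file.write(source)
file.close

Coverage.start          # must be started before the code is loaded
load file.path
greet('Railscasts')     # exercises greet only

# Coverage.result maps each loaded file to per-line execution counts;
# nil marks lines that are not executable, such as "end".
_path, line_counts = Coverage.result.find do |path, _counts|
  path.end_with?(File.basename(file.path))
end

# Convert zero-count array indexes into 1-based line numbers.
uncovered_lines = line_counts.each_index.select { |i| line_counts[i] == 0 }.map { |i| i + 1 }
puts "Lines never executed: #{uncovered_lines.inspect}"

file.unlink
```

The body of `farewell` is the only line reported, which is the same kind of information the report highlights in red.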

Reek

Reek is similar to Flay in that it looks for parts of your code that need work. It detects “code smells” in your application and reports on them. Common smells include code duplication, long methods and repeated calls. Reek provides a useful description of each problem, which should make it easy to find and refactor the offending code.

Saikuro

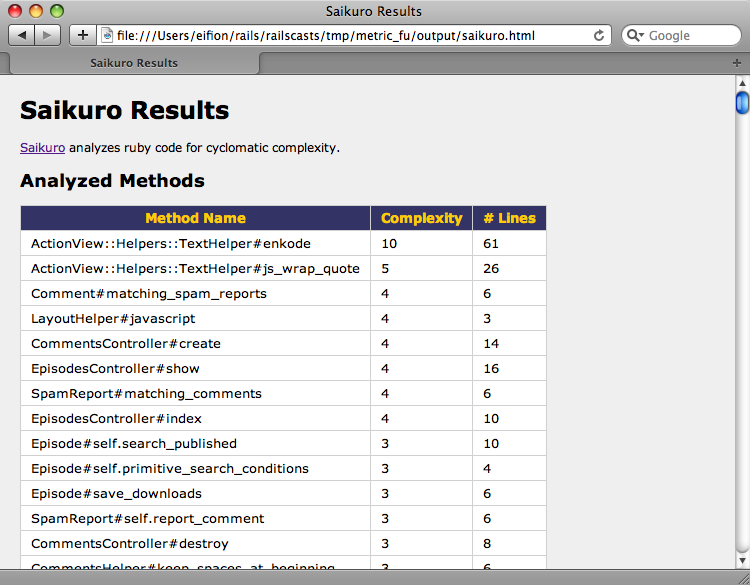

The last report we’ll look at is the one provided by Saikuro.

Saikuro is similar to Flog in that it analyses the complexity of your code. As well as giving each method a score, it usefully shows the number of lines in each method in your application, which is a good way of finding the methods that may need refactoring.
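
A per-method score of this kind can be approximated with a cyclomatic-style count: one plus the number of decision points in the method. The sketch below assumes that definition and uses Ruby’s standard Ripper library. It is not Saikuro’s implementation, and the `simple` and `branching` methods are made up for the example.

```ruby
require 'ripper'

# Node types treated as decision points. This list is an assumption made
# for the sketch and is not taken from Saikuro.
DECISION_NODES = %i[if elsif unless while until when if_mod unless_mod
                    while_mod until_mod ifop rescue].freeze

# Counts decision points anywhere below the given syntax-tree node.
def count_decisions(node)
  return 0 unless node.is_a?(Array)

  own = DECISION_NODES.include?(node.first) ? 1 : 0
  own + node.sum { |child| count_decisions(child) }
end

# Yields the name and syntax tree of every `def` found in the tree.
def each_method(node, &block)
  return unless node.is_a?(Array)

  block.call(node[1][1], node) if node.first == :def
  node.each { |child| each_method(child, &block) }
end

# Returns a hash of method name => 1 + number of decision points.
def cyclomatic_scores(source)
  scores = {}
  each_method(Ripper.sexp(source)) do |name, tree|
    scores[name] = 1 + count_decisions(tree)
  end
  scores
end

example = <<~'RUBY'
  def simple
    1
  end

  def branching(x)
    if x > 1
      :big
    elsif x == 1
      :one
    else
      :small
    end
  end
RUBY

puts cyclomatic_scores(example).inspect
```

A method with no branches scores 1, and each additional `if`, `elsif`, loop or `rescue` adds one more path through the code.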

A Pinch of Salt

While all of the reports above provide useful information about the state of your Rails applications, it’s important not to take things too far by trying to make your code “perfect”. Code analysis isn’t an exact science and none of the tools shown are perfect in themselves. They should all be used as an aid to help you find the areas of your code that might need some work, not as an absolute instruction that you must refactor a part of your application.

Automation

Finally, if you’re using a continuous integration tool such as CruiseControl, you can integrate Metric_fu so that its reports are generated automatically. For more details see the Usage section of the Metric_fu home page.